Weekly reading: AI Agents

The Portuguese version of this content can be found here.

I’ve decided to write a short weekly article about what I’ve been reading.

This week I focused on one topic: AI agents.

I think the definition of an AI agent is still taking shape, but broadly I would describe it as a computer program (often with an LLM somewhere in the flow) that can autonomously perform tasks in an environment to achieve specific goals. Still, the concept is easier to understand through an example.

Example

Today we can ask ChatGPT or Gemini for a travel itinerary with a simple prompt like this:

Plan a 2-day itinerary. I’m going to Salt Spring Island in BC, Canada. I’ll arrive on Friday and leave on Sunday.

These LLMs will generate useful responses and can certainly help put together a real itinerary. With an AI agent, however, we could do something even more complete.

In this case, the agent could, for example, access a weather forecast API to check if it’s going to rain and suggest visiting a museum instead of a beach.

It could also query services like Booking.com to find the best hotel prices, taking into account the location of the attractions it recommended. In other words, an agent combines an LLM with other APIs and functions, calling them iteratively to produce a more complete, higher-quality result.
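The workflow above can be sketched as a small tool-calling loop. This is a minimal illustration under loud assumptions: `get_forecast` and `search_hotels` are hypothetical stand-ins that return fake data (they are not real weather or Booking.com APIs), and the decisions an LLM would normally make are replaced by simple rules so the example runs offline.

```python
from dataclasses import dataclass


# Hypothetical tool: stands in for a real weather forecast API (fake data).
def get_forecast(day: str) -> str:
    fake_forecast = {"Friday": "sunny", "Saturday": "rain", "Sunday": "sunny"}
    return fake_forecast.get(day, "unknown")


@dataclass
class Hotel:
    name: str
    price_per_night: float
    km_to_attractions: float


# Hypothetical tool: stands in for a hotel search service (fake data).
def search_hotels() -> list[Hotel]:
    return [
        Hotel("Harbour Inn", 220.0, 0.5),
        Hotel("Hilltop Lodge", 150.0, 12.0),
        Hotel("Village B&B", 170.0, 2.0),
    ]


def choose_activity(forecast: str) -> str:
    # In a real agent, an LLM would make this decision from the tool output.
    return "museum" if forecast == "rain" else "beach"


def pick_hotel(hotels: list[Hotel], km_penalty: float = 10.0) -> Hotel:
    # Trade off nightly price against distance to the recommended attractions.
    return min(hotels, key=lambda h: h.price_per_night + km_penalty * h.km_to_attractions)


def plan_trip(days: list[str]) -> dict:
    itinerary = {}
    for day in days:
        forecast = get_forecast(day)  # tool call
        itinerary[day] = choose_activity(forecast)  # decision step
    hotel = pick_hotel(search_hotels())  # tool call + decision step
    return {"itinerary": itinerary, "hotel": hotel.name}


plan = plan_trip(["Friday", "Saturday", "Sunday"])
print(plan)
# {'itinerary': {'Friday': 'beach', 'Saturday': 'museum', 'Sunday': 'beach'}, 'hotel': 'Village B&B'}
```

The point of the sketch is the separation between tools, which fetch facts from the outside world, and the decision step, which in a real agent is the LLM reasoning over those facts.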

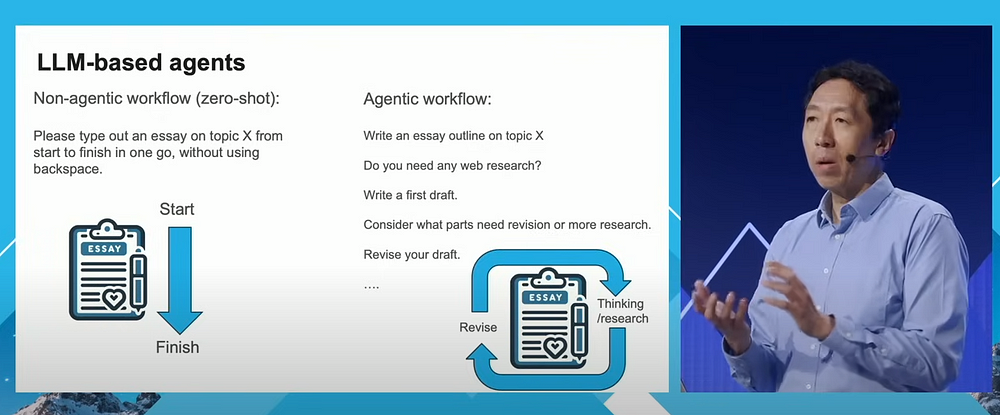

In this lecture, Andrew Ng explains what an agent workflow is and how it differs from a simple LLM prompt.

He also presents several interesting examples, such as computer vision agents that analyze a video, identify a shark, measure its distance to people, and generate a new video annotated with this information.

Another interesting and practical example is splitting the task of writing code between one agent that writes the code and another that generates tests. The testing agent runs the code against its tests and returns feedback so the first agent can improve the code.

At the end, Andrew Ng discusses the current limitations of agents and how to work around them. I highly recommend watching the full lecture.

If you prefer reading instead of watching a video, I recommend this article from MIT Technology Review: What are AI agents?

The article begins by defining what an AI agent is and notes that many agents are multimodal, able to process text, audio, and video. I found it interesting that the article points out that the concept of agents is not new; it has gained popularity because LLMs make agents much easier to build, opening up possibilities that were previously very difficult to achieve.

The most interesting part of the article discusses the limitations of agents, especially those powered by LLMs:

- Limited reasoning and need for human supervision: AI agents cannot yet reason through problems completely on their own, so they still need human oversight.

- Contextualization problems: AI agents have difficulty fully understanding context, which can lead to inappropriate responses or actions.

- Losing track of tasks: Over longer runs, AI agents may lose track of what they are working on, which can cause ongoing tasks to fail.

- Context window limitations: AI systems have limited context windows, meaning they can only consider a limited amount of data at a time, which limits their ability to remember past interactions or handle long ones.
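To make the context window limitation concrete, here is a minimal sketch of one common strategy: keeping only the most recent messages that fit a token budget. Two assumptions to flag: `count_tokens` approximates tokens by splitting on whitespace (real models use their own tokenizers), and real systems may summarize older messages instead of simply dropping them. The function names are hypothetical.

```python
def count_tokens(text: str) -> int:
    # Rough stand-in: real models count tokens with a tokenizer, not whitespace.
    return len(text.split())


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose total size fits in max_tokens."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this is forgotten
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "user: I am allergic to peanuts",
    "agent: noted, I will avoid peanut dishes",
    "user: plan dinner for Friday",
    "agent: here are three restaurant options",
    "user: now plan lunch for Saturday",
]
visible = fit_to_context(history, max_tokens=20)
print(visible[0])  # user: plan dinner for Friday
```

With a 20-token budget, the first two messages no longer fit, so the agent planning Saturday's lunch has silently lost the peanut allergy mentioned earlier, which is exactly the kind of forgotten past interaction the article describes.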

Conclusions

The goal of these posts is to use my studies and readings to share knowledge with others, get feedback, and start a discussion on these topics. If you liked this format, please let me know!

#AI #LLM #aiAgent #MachineLearning #ArtificialIntelligence #innovation