Using OpenAI API to make a coffee barista — Part 1

Code available in: https://github.com/lfomendes/guaxinim-barista

Like many other geeks, I’ve been getting into the specialty coffee scene. It’s been an exciting and caffeine-fueled journey! Brewing a great cup is simple to start but tricky to master.

I decided to blend my love for coffee with my interest in new technologies and tools.

My idea is to create a web app that uses LLMs (genAI) to help people brew better coffee while allowing me to experiment with new tools and APIs. I’ll share the code publicly and post insights in parts on Medium.

In this first installment, I’ll be building the app using Streamlit, the OpenAI API, and the Windsurf IDE. I know it might have some issues like occasional hallucinations and a “not-so-polished” interface. My apologies to all designers out there!

For those not familiar with Portuguese, ‘guaxinim’ (pronounced gwa-shee-neem) means raccoon. Why raccoons? They’re adorable, have human-like hands, and just might dream of crafting the perfect brew themselves!

So follow me on this caffeinated journey!

The MVP

Let’s dive into the fun part: here’s the interface we’ve created so far.

And a video of the app in action

I think it is a good enough MVP with all the necessary capabilities, and it took me only a few hours to build with these new tools.

The code

The flow of the app is simple. The user chooses one of three actions:

- Teach me how to brew from zero

- Help me improve my brew

- Ask anything coffee related to the web-barista

Each action shows a different interface below, but all of them call the OpenAI API using chat completions.

For this MVP, my folder is organized like this:

    guaxinim/
    ├── guaxinim/
    │   ├── __init__.py
    │   ├── core/
    │   │   ├── __init__.py
    │   │   ├── guaxinim_bot.py
    │   │   └── coffee_data.py
    │   ├── ui/
    │   │   ├── __init__.py
    │   │   └── home.py
    │   └── utils/
    │       └── __init__.py
    ├── tests/
    │   └── unit/
    ├── main.py
    ├── README.md
    ├── requirements.txt
    ├── .gitignore
    └── env.example

Streamlit makes it easy to build an interface with only Python code. Almost everything for the interface is inside the home.py file.
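To make the flow concrete, here is a minimal sketch of how a home.py-style page could wire the three actions to a single LLM call. It is not the code from the repository: the widget labels, the list of brewing methods, the `render_home` function and the `ask` callback are all assumptions for illustration. The Streamlit module is passed in as `st` so the sketch can be exercised without launching the app.

```python
from typing import Callable

ACTIONS = [
    "Teach me how to brew from zero",
    "Help me improve my brew",
    "Ask anything coffee related to the web-barista",
]


def render_home(st, ask: Callable[[str], str]) -> None:
    """Draw the page. `st` is the streamlit module; `ask` sends a prompt to the LLM.

    Both parameter names are hypothetical, as are all widget labels below.
    """
    st.title("Guaxinim Barista")
    action = st.selectbox("What do you want to do?", ACTIONS)

    # Each action shows its own inputs and builds its own prompt text.
    if action == ACTIONS[0]:
        method = st.selectbox("Brewing method", ["V60", "French Press", "Cold Brew"])
        prompt = f"Teach me how to brew coffee using the {method} method."
    elif action == ACTIONS[1]:
        issue = st.text_input("What is wrong with your cup?")
        coffee_g = st.number_input("Coffee amount (g)", min_value=0.0, value=20.0)
        water_ml = st.number_input("Water amount (ml)", min_value=0.0, value=180.0)
        prompt = f"Issue: {issue} Coffee amount: {coffee_g}g Water amount: {water_ml}ml"
    else:
        prompt = st.text_input("Your coffee question")

    # All three actions end in the same call to the model.
    if st.button("Ask the barista"):
        st.markdown(ask(prompt))


# In a real app this would be driven by:
#   import streamlit as st
#   render_home(st, ask=some_function_that_calls_the_llm)
```

Keeping the LLM call behind one callback means the interface code does not need to know which prompt or model sits behind it.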

Everything will be written in Python using the Windsurf IDE. I will try to use it as intended, with prompts like this:

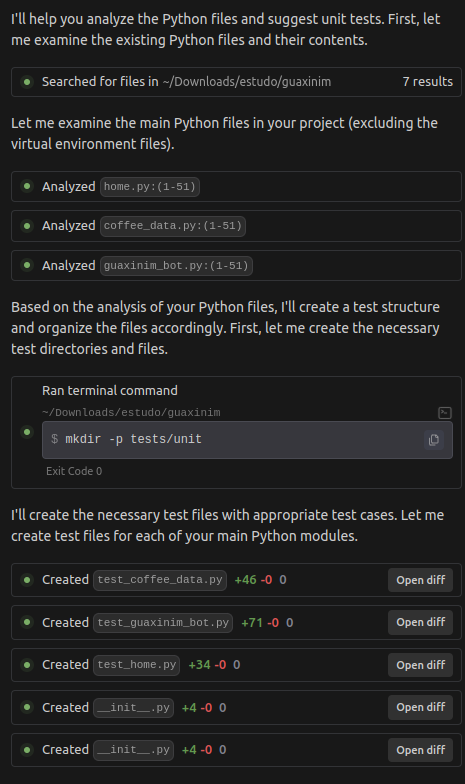

Analyze all .py files and suggest possible unit tests, create the necessary folders and organize the files accordingly

Just so you understand, that was the result for this prompt at the time.

Prompts and the OpenAI API

I created three different prompts, one for each action. They can be found in the guaxinim_bot.py file. I’m using OpenAI’s chat completions.

Improve prompt

system

You are a professional coffee barista expert.

user

Analyze these coffee parameters and suggest improvements:
Issue: Too acidic
Coffee amount: 20.0g
Water amount: 180.0ml

Teach prompt

You are a professional coffee barista with years of experience. You are teaching someone how to make an excellent cup of coffee using the Cold Brew method. Please provide a detailed, step-by-step guide that includes:
1. Required equipment
2. Recommended coffee-to-water ratio
3. Grind size recommendation
4. Water temperature
5. Detailed brewing steps
6. Common mistakes to avoid
7. Tips for achieving the best results
Format your response in markdown for better readability.

Coffee question prompt

system

You are a professional coffee barista expert.

user

Check if the following question is coffee-related:
If yes: Provide a detailed answer
If no: Reply with 'I only answer questions about coffee.'
Question: What is the best place to buy coffee?

I’ve added a basic security feature to ensure the barista only answers coffee-related questions. I tried to “hack” it, but the protection seems good enough for now.
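As a rough sketch of how prompts like these can be assembled from user input, the snippet below builds each one as a plain string and wraps it in the system/user message list that chat completions expects. The function names (`improve_prompt`, `teach_prompt`, `question_prompt`, `build_messages`) are hypothetical and the real guaxinim_bot.py may be organized differently. Only the prompt wording comes from the examples above.

```python
SYSTEM_PROMPT = "You are a professional coffee barista expert."

GUIDE_TOPICS = [
    "Required equipment",
    "Recommended coffee-to-water ratio",
    "Grind size recommendation",
    "Water temperature",
    "Detailed brewing steps",
    "Common mistakes to avoid",
    "Tips for achieving the best results",
]


def improve_prompt(issue: str, coffee_g: float, water_ml: float) -> str:
    """Prompt for the 'Help me improve my brew' action."""
    return (
        "Analyze these coffee parameters and suggest improvements:\n"
        f"Issue: {issue}\n"
        f"Coffee amount: {coffee_g}g\n"
        f"Water amount: {water_ml}ml"
    )


def teach_prompt(method: str) -> str:
    """Prompt for the 'Teach me how to brew from zero' action."""
    numbered = "\n".join(f"{i}. {t}" for i, t in enumerate(GUIDE_TOPICS, start=1))
    return (
        "You are a professional coffee barista with years of experience. "
        "You are teaching someone how to make an excellent cup of coffee "
        f"using the {method} method. "
        "Please provide a detailed, step-by-step guide that includes:\n"
        f"{numbered}\n"
        "Format your response in markdown for better readability."
    )


def question_prompt(question: str) -> str:
    """Prompt for the free-form question action, with the coffee-only guard."""
    return (
        "Check if the following question is coffee-related:\n"
        "If yes: Provide a detailed answer\n"
        "If no: Reply with 'I only answer questions about coffee.'\n"
        f"Question: {question}"
    )


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Wrap a user prompt in the message list used by chat completions."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(question_prompt("What is the best place to buy coffee?"))
```

Note that in this sketch the guard lives inside the prompt and the user's text is appended to it, so it only works as long as the model follows the instruction.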

Using the OpenAI API is very straightforward, as the following code snippet shows:

    # Requires: from openai import APIConnectionError, APIError
    try:
        response = self.client.chat.completions.create(
            model=self.GPT_MODEL,
            messages=[
                {
                    "role": "system",
                    "content": "You are a professional coffee barista expert.",
                },
                # The guard prompt is prepended to the user's question
                {"role": "user", "content": basic_prompt + query},
            ],
            temperature=0.2,  # low value keeps answers focused
            max_tokens=500,  # cap the length of the reply
            store=True,  # ask OpenAI to store this completion
        )
        return response.choices[0].message.content
    except (APIError, APIConnectionError) as e:
        return f"Error processing question: {str(e)}"

You can check the documentation for chat completions in the OpenAI API reference. I’m using a temperature of 0.2 to make the answers more focused and deterministic.
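To give an intuition for why a low temperature makes answers more deterministic, here is a toy illustration of temperature scaling applied to a softmax. The three scores are made up, and this is the textbook formulation rather than anything taken from OpenAI's implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, dividing by the temperature first."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


toy_logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for t in (1.0, 0.2):
    probs = softmax_with_temperature(toy_logits, t)
    print(f"temperature={t}:", [round(p, 3) for p in probs])
```

With these toy scores, the top candidate holds roughly two-thirds of the probability at temperature 1.0 and more than 99% at 0.2, which is why low values tend to give more repeatable answers.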

Next Steps

I’m very happy with the current state of this first version. It is available under the tag v1.0.0 (git checkout v1.0.0) in the git repository.

But now I want to take it to the next level by collecting curated information about coffee from experts like James Hoffmann and improving the barista so it uses this knowledge and can even point the user to the source of the information.

This is usually what Retrieval-Augmented Generation (RAG) is used for, so in the next post I will share my experience creating a simple RAG for this barista app.

Thanks for reading! Feel free to leave any questions or suggestions in the comments.

The second part is already out: https://medium.com/me/stats/post/73ef598aa0f8