Thoughts on: RAG, Hybrid Search, and Rank Fusion

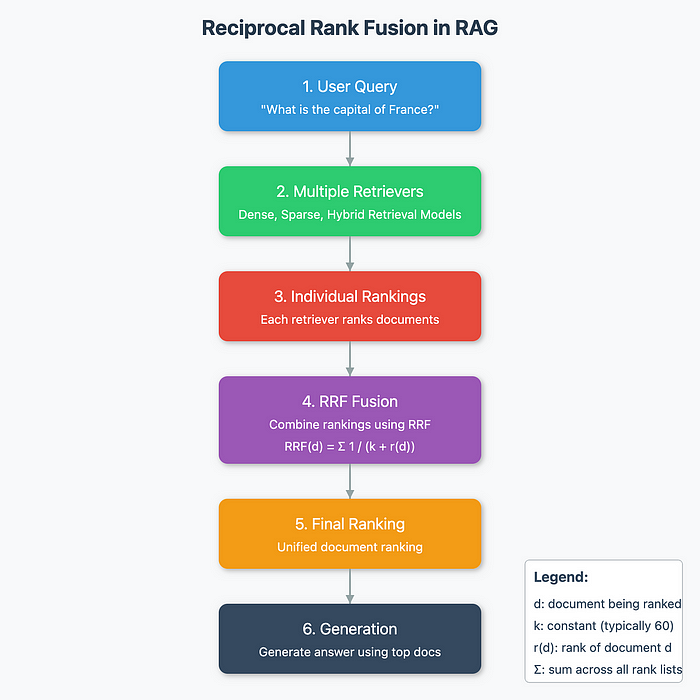

Recently, I came across an interesting post: “Reciprocal Rank Fusion (RRF) explained in 4 mins — How to score results from multiple retrieval methods in RAG”.

The article is straightforward and quickly explains the concept of RAG (Retrieval-Augmented Generation), highlighting the importance of the document retrieval phase.

I would like to share some thoughts on these technologies and their challenges. But first, it’s worth defining some concepts.

RAG (Retrieval-Augmented Generation)

It’s a technique that combines retrieving relevant information from a database with text generation by LLMs. This makes it possible to generate responses that are more accurate and better grounded in context. It is particularly useful when applying LLMs in specific environments, such as answering internal company questions.
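To make the two stages concrete, here is a minimal sketch of the retrieve-then-generate flow. Everything in it is an assumption for illustration: the `retrieve` and `build_prompt` helpers are hypothetical, the documents are made up, and the word-overlap scoring is a toy stand-in for a real retriever. The call to an actual LLM is deliberately left out.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share (toy retriever)."""
    query_words = set(query.lower().split())

    def overlap(doc):
        return len(query_words & set(doc.lower().split()))

    return sorted(documents, key=overlap, reverse=True)[:top_k]


def build_prompt(query, context_docs):
    """Place the retrieved documents in front of the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical internal company documents.
documents = [
    "Vacation requests must be submitted two weeks in advance.",
    "The office kitchen is cleaned every Friday.",
    "Expense reports are due by the fifth day of each month.",
]

query = "When are expense reports due?"
prompt = build_prompt(query, retrieve(query, documents, top_k=1))
print(prompt)  # this prompt would then be sent to the LLM of your choice
```

The point of the sketch is that the model only sees what the retriever hands it, which is why the quality of the retrieval step matters so much.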

Hybrid Search

Hybrid search is a fundamental concept in information retrieval systems: it integrates multiple search strategies to improve the relevance and accuracy of the results. It typically pairs traditional models like BM25, which are effective for keyword-based searches, with semantic methods based on embeddings, which capture contextual and semantic similarity. By combining these two approaches, hybrid search balances exact term matching and contextual understanding, delivering richer and more relevant results.

Rank Aggregation Problem

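It involves merging multiple ranked lists or preference orders into a single consensus ranking. In hybrid search, each retrieval method produces its own ranked list, so combining them is an instance of this problem.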

We are living in the era of LLMs, strongly driven by solutions like ChatGPT and Gemini. But the more we use these solutions, the more we realize the significant challenge of model “hallucinations.”

RAG is extremely valuable in this context: by grounding answers in retrieved documents, it helps generic LLMs operate effectively in specific scenarios.

RAG systems depend heavily on the document retrieval stage, yet it’s interesting to note that search engines have become so common in our daily lives that we often overlook the complexity of their challenges.

While today we have modern models that perform semantic search using text embeddings, there are still many situations where more traditional algorithms, like BM25, work better, for example when a query hinges on exact terms such as product codes or rare names. Therefore, many companies are investing in integrating different search techniques (hybrid search). This is where Reciprocal Rank Fusion (RRF) comes in as a simple and efficient tool for combining search results: it only needs each document’s position in each ranked list, not the underlying scores.
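As a minimal sketch of how RRF works: each document receives 1 / (k + rank) from every list it appears in, and those contributions are summed. The constant k = 60 is a commonly used default; the function name and the example document ids below are assumptions made for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into one ranking.

    Each document gets 1 / (k + rank) from every list it appears in
    (ranks start at 1); a document missing from a list gets nothing from it.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document id for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical results from two retrievers for the same query.
bm25_ranking = ["doc_a", "doc_b", "doc_c"]
semantic_ranking = ["doc_c", "doc_a", "doc_d"]

fused = reciprocal_rank_fusion([bm25_ranking, semantic_ranking])
for doc_id, score in fused:
    print(f"{doc_id}: {score:.4f}")
```

In this example `doc_a` ends up first because it sits near the top of both lists, while `doc_b` and `doc_d`, each found by only one retriever, fall to the bottom. Note that the raw BM25 and similarity scores never enter the computation, so there is nothing to normalize.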

Besides RRF, there are other ways to combine search results, such as:

* Conducting an initial search with BM25 and then adjusting the order using semantic similarity.

* Combining the results of both methodologies into a single score.

* Creating a learning-to-rank model that uses these scores along with contextual information and query type to improve the final ranking.
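The first two alternatives can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the min-max normalization, the `alpha` weight, and the scores are all hypothetical choices, and real systems may normalize or weight differently. The learning-to-rank option is not covered here because it requires training data.

```python
def min_max(scores):
    """Rescale a {doc_id: score} dict to [0, 1] so both scales are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def rerank(bm25_scores, semantic_scores, top_n=3):
    """Option 1: take the BM25 top-n, then reorder them by semantic similarity."""
    candidates = sorted(bm25_scores, key=bm25_scores.get, reverse=True)[:top_n]
    return sorted(candidates, key=lambda d: semantic_scores.get(d, 0.0), reverse=True)


def weighted_fusion(bm25_scores, semantic_scores, alpha=0.5):
    """Option 2: blend normalized scores; alpha is the weight given to BM25."""
    bm25_n, sem_n = min_max(bm25_scores), min_max(semantic_scores)
    docs = set(bm25_n) | set(sem_n)
    combined = {
        d: alpha * bm25_n.get(d, 0.0) + (1 - alpha) * sem_n.get(d, 0.0)
        for d in docs
    }
    return sorted(combined, key=lambda d: (-combined[d], d))


# Hypothetical scores from each retriever for the same query.
bm25_scores = {"doc_a": 12.0, "doc_b": 9.0, "doc_c": 3.0}
semantic_scores = {"doc_a": 0.80, "doc_c": 0.90, "doc_d": 0.60}

print(rerank(bm25_scores, semantic_scores))
print(weighted_fusion(bm25_scores, semantic_scores))
```

The two options behave differently: reranking can never surface `doc_d`, because BM25 did not retrieve it in the first place, while the weighted blend keeps it as a candidate. The blend, in turn, depends on the normalization and on `alpha`, which usually need tuning.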

When we think about RRF (Reciprocal Rank Fusion), we can draw an interesting analogy with the Oscar voting process. The voters choose the best film by listing their preferences in order. In the end, these lists need to be combined to determine the winner. This aggregation process is not simple and involves deep mathematical challenges, such as those described by Arrow’s Impossibility Theorem, which shows that, with three or more options, no ranking-based aggregation rule can satisfy a set of seemingly reasonable fairness criteria all at once.
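A small example helps show why combining rankings is hard. The sketch below is not Arrow’s theorem itself but a related classic illustration, the Condorcet paradox: three hypothetical voters each have a perfectly consistent ranking, yet the majority preference goes in a circle. The ballots and the helper function are made up for illustration.

```python
from itertools import combinations


def pairwise_winner(ballots, x, y):
    """Return whichever of x, y is ranked higher on most ballots (None on a tie)."""
    x_wins = sum(1 for ballot in ballots if ballot.index(x) < ballot.index(y))
    y_wins = len(ballots) - x_wins
    if x_wins == y_wins:
        return None
    return x if x_wins > y_wins else y


# Three hypothetical voters, each ranking the same three films.
ballots = [
    ["Film A", "Film B", "Film C"],
    ["Film B", "Film C", "Film A"],
    ["Film C", "Film A", "Film B"],
]

for x, y in combinations(["Film A", "Film B", "Film C"], 2):
    print(f"{x} vs {y}: majority prefers {pairwise_winner(ballots, x, y)}")
```

Here the majority prefers Film A over Film B, Film B over Film C, and Film C over Film A, so there is no winner that everyone would accept as obviously right. Any fusion method, RRF included, resolves cases like this by making a choice about what to prioritize.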

I might have strayed a bit, but the conclusion is that while generative AI is a revolutionary technology, its true value arises when it is combined with search systems and other technologies, forming truly intelligent agents. It’s crucial to remember that there is no single solution for complex problems like document retrieval. Therefore, it is essential to understand the different technologies available, as well as their strengths and weaknesses, to combine them effectively.

Disclaimer: I used an LLM to review the text of this post.